Public Cloud

On-demand GPU compute and inference services.

Serverless

API endpoints for instant, scalable generative AI inference.

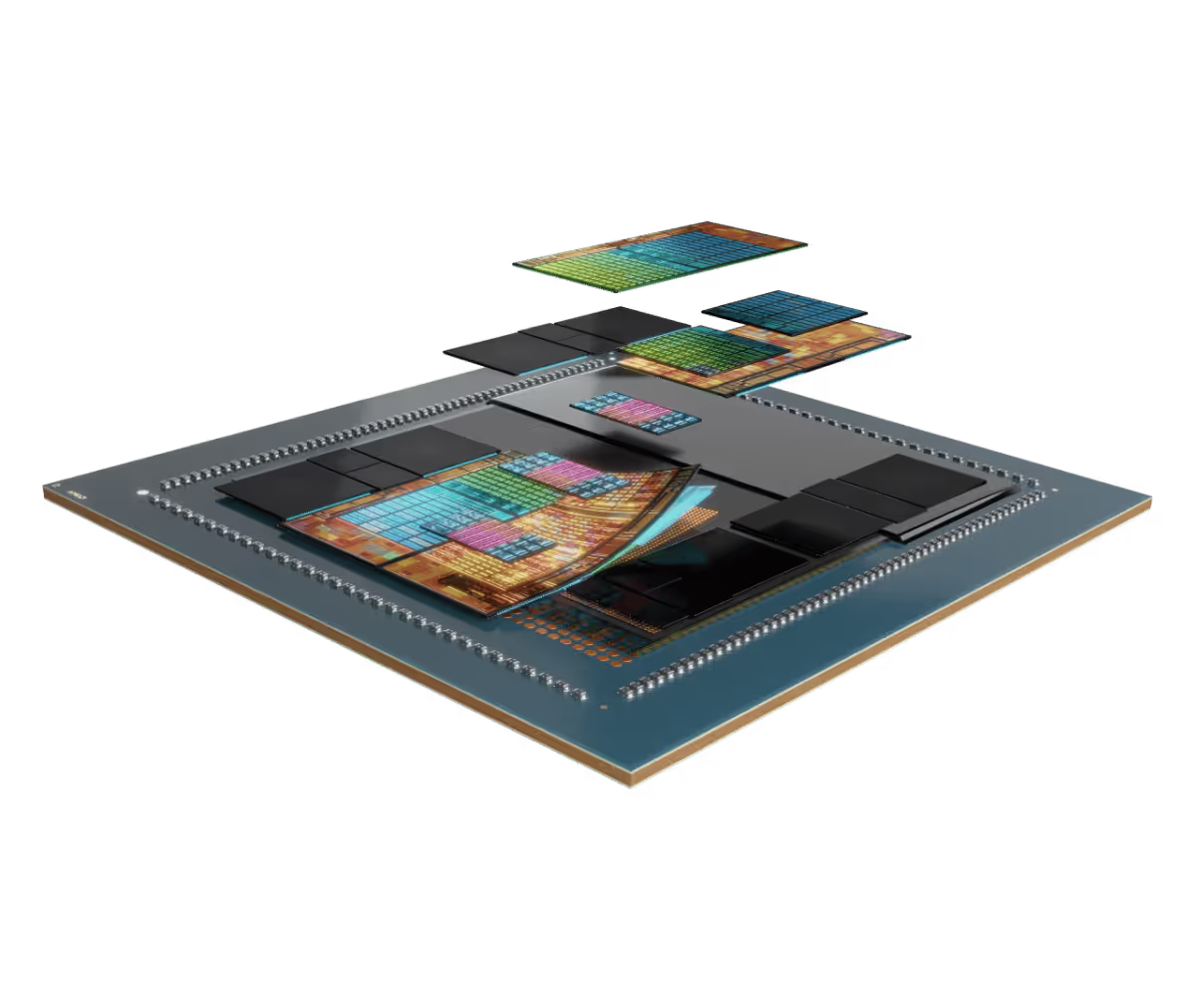

AMD Instinct™ MI300 Series accelerators are built to power even the most demanding AI and HPC workloads, delivering exceptional compute performance, large memory capacity, high memory bandwidth, and support for specialised data formats.

Reduce costs, grow revenue, and run your AI workloads more efficiently on a fully integrated platform. Whether you're using Nscale's built-in AI/ML tools or your own, our platform is designed to simplify the journey from development to production.